Augmented Reality for Visually Impaired People

For the capstone research project of my Berkeley master’s degree, I chose to examine how augmented reality could be used to aid blind and visually impaired people in indoor navigation. I worked alongside 3 graduate students and 4 undergraduates to conduct user research, design, prototyping, and user testing of the AR for VIPs system, and created an award-winning video demonstrating the system and its necessity.

You can see our final deliverables below. Scroll on for the full story!

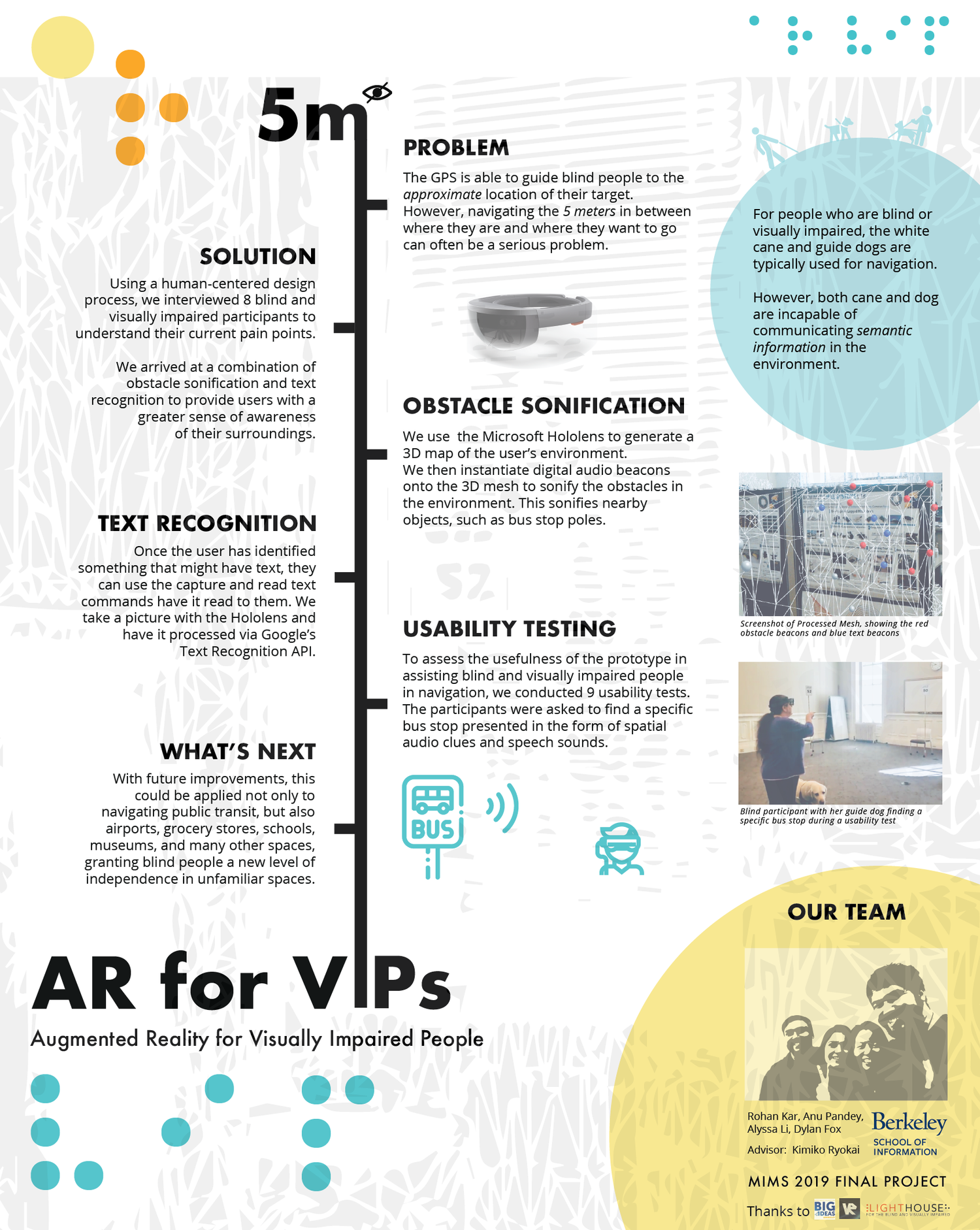

Our research poster explaining the basics of AR for VIPs. It describes the “5 meter problem” in navigation for visually impaired people, our solution incorporating obstacle sonification and text recognition, and our usability testing and future improvements.

Our final report contains the full information about the project and its outcomes.

(57 pages including appendices)

Audio descriptions available here

Augmented Reality for Visually Impaired People (AR for VIPs) is a research project from UC Berkeley's School of Information that seeks to put augmented reality to use as an accessibility tool for people with low vision or blindness.

Presentation of Augmented Reality for Visually Impaired People (AR for VIPs) at UC Berkeley's School of Information MIMS capstone presentations 2019.

Project at a Glance


Timeline: 9 months, September 2018 - May 2019

Project Type: Capstone Research

Team: myself, three other graduate students, and four undergraduate students

My Role:

- Spearheaded initial research proposal

- Conducted user research & existing solution benchmarking alongside team

- Co-designed the initial system with the team’s other designer

- Project manager & development lead for prototyping

- Assisted user testing

- Produced the award-winning demonstration video

Tools: Unity, C#, Adobe After Effects, Microsoft HoloLens

Context

The Challenge

For the roughly 36 million blind people around the world, navigating unfamiliar spaces can be a frustrating or dangerous experience. Though people can learn to navigate cautiously through sound and touch, many places assume that their visitors can quickly see layouts and features, and offer no substitute for printed signs and other purely visual navigational necessities.

Augmented reality holds the potential to alleviate these problems. It is no silver bullet, but we set out to show that the ability of modern AR headsets to construct 3D maps of their surroundings and tap machine learning tools such as text recognition could make them a powerful addition to classic tools like the white cane and guide dog.

“AR devices might know facts about the environment that blind people wearing them don’t. How could we usefully identify and transmit that knowledge?”

We thought this would be a worthwhile challenge for our UC Berkeley School of Information Capstone Project, a project all MIMS master’s students must complete to graduate from the I School.

Problem Definition

Perkins School for the Blind Bus Stop Challenge.

Audio descriptions available at https://youtu.be/NOjUsunoVbk

Of course, this is a huge problem space. Ultimately, we were inspired by the Perkins School for the Blind Bus Stop Challenge to focus on the “5 meter problem”: how blind users can bridge the final ~5 meters between the approximate location their GPS guides them to and the actual location of their goal.

We defined our success criteria as the user being able to:

Identify the location of simple obstacles, such as bus stop poles, from a distance

Read semantic information written on these obstacles in order to identify their goal

Navigate to their goal

Note that due to the constraints of the generation 1 HoloLens (see “Constraints” below), we knew from the start that any prototype we made would be effective in controlled environments only, and would not be viable as an actual product for at least several years. Thus, our goal was to conduct research that could inform future accessible design for AR, not release a consumer product.

Users

We aimed for our application to be useful to both totally blind and low-vision people by implementing a purely audio interface. (Visual interface elements seen in screenshots and videos were for testing and illustration purposes only.) In doing so, we hoped that our interface would be compatible with sight-based vision aids such as contrast enhancement. Unfortunately, it also meant that our app would only be useful to blind people with some amount of hearing ability.

Team and Role

Core Team

Our core team consisted of myself and three other master’s students in the School of Information. We ran the project from start to finish.

Myself, XR designer, lead developer and project manager

Alyssa Li, XR designer with a focus on sound design

Anu Pandey, user researcher and healthcare professional

Rohan Kar, XR developer and machine learning expert

Secondary Team

Our secondary team consisted of four undergraduate students from Virtual Reality at Berkeley, Berkeley’s undergrad XR association, who assisted in development.

Rajandeep Singh, spatial mapping

Manish Kondapolu, text recognition

Elliot Choi, sound design

Teresa Pho, raycasting

Advisors

In addition to those actively working on the project, we received advice and guidance from many sources.

Berkeley professor Kimiko Ryokai served as our academic advisor, offering advice on many aspects of the project throughout its development. Professors John Chuang and Coye Cheshire also gave invaluable help. Professor Emily Cooper of the School of Optometry, whose research uses the HoloLens for sight enhancement, was a valuable reference throughout the project, and she was kind enough to lend us a HoloLens for testing purposes.

Microsoft Principal Software Engineer Robert “Robin” Held and Senior Audio Director Joe Kelly helped immensely with their detailed knowledge of HoloLens systems.

Lighthouse Labs Technology Director Erin Lauridsen gave us great feedback and connected us with many people in the blind & low vision tech community to speak and test with.

National Federation of the Blind’s Lou Anne Blake, Curtis Chong, and Arielle Silverman gave us great advice on which use cases to focus on.

Berkeley Web Access’ Lucy Greco was a huge help, offering us advice on several occasions and testing our prototype as well.

My Role

Overall, I took on a leadership role in the project.

I originated the project as a generalized push for XR accessibility, searched out an advisor, and recruited the other three graduate members to the team.

I served as project manager, leading meetings, identifying long-term and short-term goals, assigning tasks, and ensuring we were on track to meet them.

Together with Alyssa, I designed the overall interface and functionality.

I coordinated the development of individual components and wrote the code that combined them, as well as developing the interface itself.

I edited our project video, which won the School of Information Capstone Video Award.

I introduced the project at the public I School Capstone Presentations.

Constraints

Time & Funding

As a student research project, we had no funding to speak of, and our grant applications were rejected. Fortunately we had access to development space & hardware through Virtual Reality at Berkeley, but any additional supplies had to come out of our own pockets. Similarly, though the capstone research was our main priority during the last semester, we all had multiple other time commitments, and had to juggle other classes and graduation preparations while working on it.

Blind User Access

As none of us on the team were blind or visually impaired, we knew we had to involve blind and low vision people early and often to make sure that we were designing with them, not at them. However, this made getting feedback and review from blind users something that had to be arranged well in advance and typically required travel. With a blind person on the team, we could have had significantly tighter feedback cycles.

“We knew we had to include blind and visually impaired people in the whole process, so that we’d be designing WITH them and not AT them.”

HoloLens Limitations

HoloLens Development Edition

We were using the original HoloLens Development Edition for most of our work, which had the following key limitations:

Slow scanning speed - building an accurate mesh required slow, deliberate back-and-forth scanning, so in practice areas had to be pre-scanned to be useful.

Poor outdoor performance - due to the sun’s infrared rays interfering with the HoloLens’ scanning capabilities, we were limited to indoor scanning. This meant that scanning actual bus stops would not be effective.

Heavy weight/poor ergonomics - every single blind user we tested with commented that the HoloLens would be uncomfortable to wear for more than a few minutes.

Blind-inaccessible setup - the HoloLens interface was not usable by non-expert users without vision, meaning we would have to launch and monitor the app for blind users.

Put together, these limitations meant that we knew our project would only be usable in strictly controlled conditions. A consumer-ready product would likely not be possible on this hardware. However, the project would still have utility as research, paving the way for products to come.

Design Process

Prologue: Exploring XR Accessibility

One of the cardinal rules of UX design is to start with user needs and find technologies that fit them, not vice versa. We broke that rule with this project; here’s why.

Initial Exploration

The School of Information prompt for capstone research is pretty liberal: it simply asks for “a challenging piece of work that integrates the skills and concepts students have learned during their tenure.” At the start of my final year, I only knew that I wanted to work on something related to XR accessibility. I wasn’t sure if it would shape up to be a set of guidelines for developers like AbleGamers’ Includification guidelines, an accessibility add-on to Unity or the Virtual Reality Toolkit, or something else entirely, but I dove headfirst into exploration and soon recruited Anu and Alyssa to the cause.

Insight: Information Asymmetry in AR

While we were researching, an idea caught our attention. AR devices constantly scan the environment to build up a 3D representation in which to place imagery. For sighted users, this representation holds little new information; for blind users, however, the AR device knows many things about the environment that they do not. If we could figure out which 3D mapping data were useful, and when, we could exploit this information asymmetry to have the AR device communicate useful information to a blind user in real time.

Idea: AR device environmental scans could hold useful information for blind people

Hackathon Experimentation

We soon had a chance to prototype this theory. Alyssa and I, along with classmates Soravis “Sun” Prakkamakul, Neha Mittal, and Ankit Bansal, entered the Magic Leap AT&T Hackathon in early November 2018. The organizers wanted to show that AR can be for more than simple entertainment purposes. Our submission, Eyes for the Blind, was a rudimentary exploration of sound feedback based on spatial mapping; with practice we were able to successfully wander around the exhibition hall with our eyes closed without bumping into anything, and nearly managed to integrate text recognition. We knew this idea had potential, and we had an inkling of how we could go about it.

Our Magic Leap Hack team testing our initial theory.

Between the hackathon and our initial research trajectory, we ended up focusing on figuring out how AR technology - specifically headsets with advanced spatial mapping capabilities like the HoloLens and Magic Leap - could act as accessibility devices for blind and low-vision users. Although this was a tech-first approach, we felt that showcasing these devices’ potential for accessibility was worthwhile and could lead to better access to these kinds of tools for blind users in the future.

Research: People, Papers, and Products

Though we had a prototype in hand after the frenzy of the hackathon, we wanted to be sure that our project was on solid ground. To do so, we stowed our prototype and went back to basics, looking to three key sources to learn the lay of the land when it comes to low-vision accessibility: people, papers, and products.

Blind People & Organizations

Straightaway we made contact with the organizations mentioned in the “advisors” section above. We wanted to understand which technologies were commonly used and what user needs they left unfilled. Ultimately, we had five interview sessions with 1-4 interviewees per session. Our key takeaways:

The guide dog and white cane are the gold standards of accessibility technology, relied on by millions of people. New tools should attempt to supplement, not replace, these traditional tools.

While gathering physical information about nearby objects can be useful, identifying and gathering semantic information such as text in their immediate environment is a frequent unmet need for blind users.

For blind people, sound is a valuable resource. Interfaces that carelessly make noise and cover up important environmental sounds can be annoying or even dangerous for blind users.

Some of these were really revealing to us. For example, we heard from one interviewee:

“Bumping into walls or tables may seem like a problem to you, but for us it’s just how we learn where things are.”

We had thought that helping users navigate more smoothly without having to touch everything would be a major plus, but it became clear that we still had to overcome our bias as sighted people.

Academic Papers

Academia is often a test bed for applying new technologies to accessibility challenges, especially blindness; we found a plethora of papers examining various approaches. We chose about 20 papers to examine closely, split them between us, and dove in, recording major takeaways in a Google Drive spreadsheet and ranking the papers by applicability.

We organized our literature review sheet to ensure easy information recall and citation for the ~20 academic papers we examined. Image shows spreadsheet with categories for reader, title, date, authors, summary, notes, and relevance.

Some of the most interesting papers included:

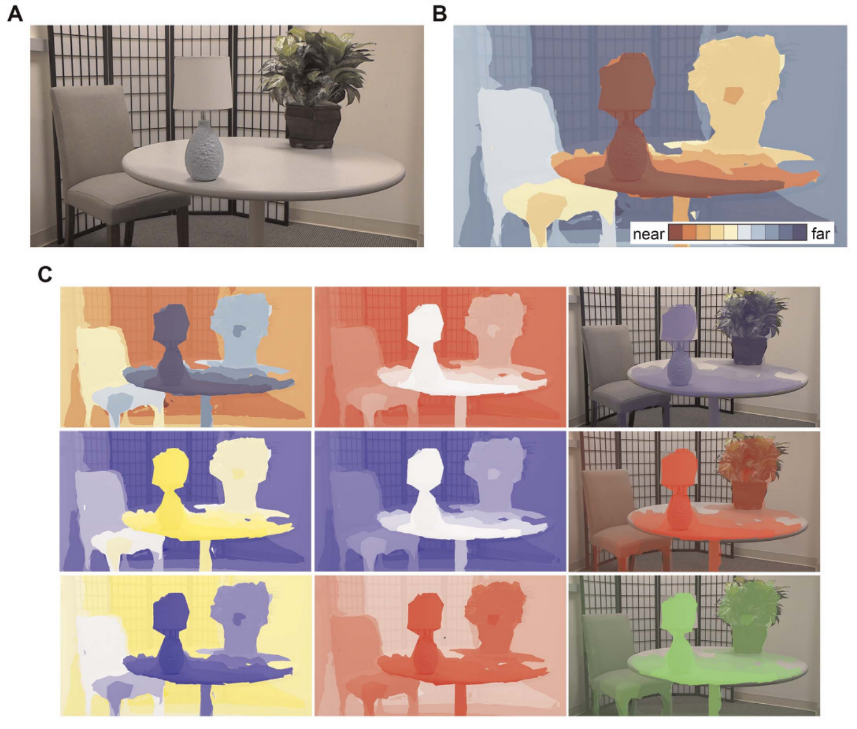

A) “Using an Augmented Reality Device as a Distance-based Vision Aid—Promise and Limitations,” Kinateder et al. A project from Dr. Emily Cooper’s lab, this explores using the HoloLens as a contrast-enhancement device to layer objects with color based on distance.

B) “Sonification of 3D Scenes Using Personalized Spatial Audio to Aid Visually Impaired Persons,” Bujacz et al. This examined how physical arrangements of objects could be captured and sonified for the benefit of visually impaired people.

C) “A HoloLens Application to Aid People who are Visually Impaired in Navigation Tasks,” Jonathan Huang. This researcher developed a “TextSpotting” application for the HoloLens to identify and make available text in the user’s environment.

D) “Augmented Reality Powers a Cognitive Assistant for the Blind,” Liu et al. This Caltech lab developed an assistant that could identify individual objects and guide users through a building on a precomputed path.

In addition to the subject matter itself, these papers often included open source code which we could utilize in our application, as well as offering guidelines for how to structure our user testing.

Products for Blind Users

The competitive landscape of products aimed at helping blind users navigate is crowded. Broadly, these products fell into a few categories.

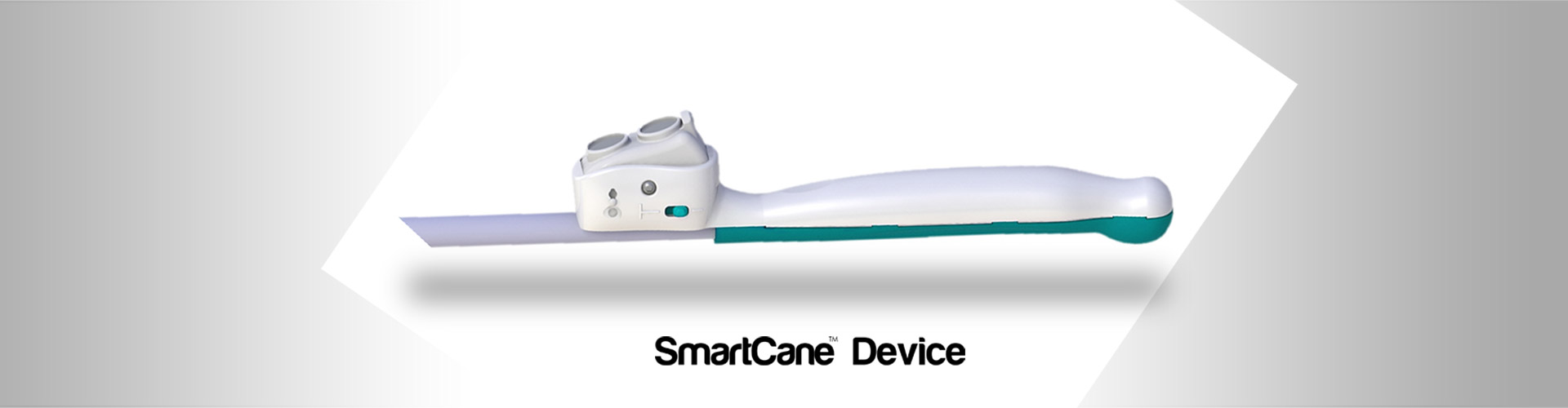

Obstacle Avoidance - Tools that help a user detect nearby obstacles and improve close range awareness. Includes the two classic tools, the white cane & guide dog, as well as various “sonic canes” like the SmartCane.

Navigation - Smartphone applications like Microsoft Soundscape or Google Maps that clue users in to their surroundings and guide them to their destination.

Sight Enhancement - Tools that let users make better use of limited vision, including classic tools like magnifying glasses as well as more advanced ones like OxSight.

Machine Vision - Tools that leverage AI to read text, discern colors, or identify other visual semantic information. May include object or facial recognition as well. Includes headsets like OrCam and apps like Textgrabber.

Human Assistance - Services like Aira & Be My Eyes that connect a blind user to a sighted human assistant. Leveraging humans is powerful but adds additional costs and privacy concerns.

We theorized that there might be a lot of value in a generalized head-worn device that could fulfill multiple roles. Just as a smartphone gains a lot of utility from being a phone AND a calculator AND a mapping device, etc., we thought that a generalized AR platform like the HoloLens could replace multiple single-use devices, and that the combination of these features might result in a sum greater than its parts.

Ideation: Probing Possibilities

Goals, Contexts, & Assumptions

As we started brainstorming in earnest, we found it helpful to lay out more clearly the possible design spaces we could focus on. For the HoloLens developer edition, that meant the following.

Goal Types

Exploration - trying to gain a general sense of what’s around them

Destination - trying to seek out a particular place or object

Constraints for Current Headsets

Due to cost, menus that blind users could not operate, and battery life concerns, HoloLenses would have to be set up by sighted helpers and loaned to blind users

Due to poor scanning in sunlight and slow scanning overall, spaces would have to be indoor and likely pre-scanned or equipped with beacons

Plausible Locations

Relatively controlled, indoor spaces.

Large enough to warrant navigational aids.

Employees to help manage the devices.

Stores, airports, malls, and museums topped the list.

Present or Future?

In determining the design space, we had to figure something out: were we designing for the HoloLens’ present capabilities, or for some more robust yet-to-be-revealed AR headset? We knew based on the HoloLens limitations (cost, scanning speed, etc. - see “Constraints” above) that it wasn’t something blind users would be able to buy and use themselves in uncontrolled conditions. We could have designed for specific implementations, such as finding a particular item in a grocery store, that fit those constraints.

“Were we designing for the HoloLens’ present capabilities, or for some more robust yet-to-be-revealed AR headset?”

Ultimately, in looking for challenges to focus on, we veered towards the Perkins School for the Blind Bus Stop Challenge: helping users locate and identify bus stops. Considering that the HoloLens couldn’t operate in sunlight, and that a user operating the HoloLens to navigate public transport by themselves didn’t fit into the indoor, loaned device scenarios that were more likely in the short term, this may have been an incongruous choice. However, it had the benefits of being a concrete challenge identified by the blind community, being relatively easy to simulate, and likely having lots of transferable applicability.

The Singing Mesh

Going into the initial hackathon, our central idea was sonification of the 3D mesh that AR headsets construct of the environment - aka “the singing mesh.” Much in the way bats, dolphins, and even some humans can use echolocation to determine the locations of objects in their environment, we thought that by producing spatial sound at the location of scanned objects, we could increase blind user awareness. The overall effect would be of the whole mesh “singing” to the user to inform them where things are, giving even typically silent objects like tables and chairs a voice blind users could hear.

The HoloLens, Magic Leap, and other advanced headsets construct environmental meshes as the user scans.

By sonifying objects detected in the scans, we hoped to increase the user’s awareness of their environment.

Text Recognition

From our discussions with blind people, we knew that gathering semantic information would be important, and one type clearly stood out from the rest: text. Braille is vanishingly rare and hard to find compared to the absolute abundance of written text, with text often being the only way to differentiate, say, the office you want to get to from the dozen identical ones next to it.

Even then, it wasn’t obvious how text recognition should ideally behave. Simply reading out all text around the user as soon as it’s spotted could have downsides - imagine a user hearing billboard text read aloud instead of an oncoming car when crossing the street, or being flooded nonstop with product labels in a grocery store.

In keeping with our “sound is a valuable resource” user research finding, we decided that text recognition should either be manually triggered by the user, or toggled to look for a particular word. Due to development constraints, we only managed to implement the former.

Other Considered Features

Some other features we considered developing, but left on the shelf:

Guidance sound - a tone that users could assign to a location or object, letting them home in on it; similar to a feature in Soundscape. It could alternatively be implemented as an “anchor sound” to let users mark a place they’ve been, or a “compass sound” to let users know which way is north. Based on user stories of disorientation in rooms with symmetrical layouts or places with few landmarks, like parking lots.

Obstacle warning - an alert sound that plays when the user is about to run into a head height obstacle, based on user stories of finding obstacles with their face that were too high for their cane. (A common occurrence, and why many blind people wear baseball caps.)

Perimeter scan - a sound that would trace the edge of the room the user is currently in; a quick way to build spatial understanding. Deprioritized after several users told us they could understand room boundaries simply through sound quality.

Voice notes - users or administrators could leave voice notes for themselves or other users at specific locations; an asynchronous means of leveraging crowdsourcing and human intelligence.

Object recognition & other machine learning - using machine vision to recognize things besides text, such as objects, faces, or colors.

Be My Eyes integration - integrating with Be My Eyes or another human assistance program to leverage sighted helpers.

In the end, we had to cut scope pretty aggressively to match our technical talent and timeline. All of these features offered interesting supplements, but we felt that obstacle sonification and text recognition delivered the most core value.

Greater Than the Sum of its Parts

Alone, obstacle detection and text recognition are each useful. However, we hoped that together they could become greater than the sum of their parts. Consider the bus stop challenge:

Obstacle detection alone could find tall, narrow objects, but not distinguish between trees, parking limit signs, and the bus stop signs the user is actually looking for.

Text recognition could read signs to verify that the user is in the right place, but without assistance, users may have a hard time finding said signs.

By pairing the two together, we felt confident we were onto something good.

Development: Mastering the Mesh

Scope and Project Management

By the time we got all of the resources necessary for development, we had less than three months to create a working prototype, test it, and write up our findings. That meant we had to keep a tight schedule and a careful lid on scope.

I kept us organized with a simple timeline in Google Sheets that laid out where we needed to be and when, and an Asana project management board split according to our three teams.

The scope we settled on for our MVP, and the realm of our first two teams, was:

Functional obstacle detection that could make users more aware of their surroundings.

Straightforward text recognition that a user could manually activate if they suspected text was in their field of view.

Our final team would study the complex sound design required for both of these features to function in an all-sound application.

Divide and Conquer

I led the obstacle recognition team, as well as managing most of the integration process. Here’s a brief overview of the focus of each team; more detail can be found in pages 16-25 of our report. We did all of our development using Unity 3D.

Obstacle Recognition

Accessed spatial mesh, split into planes and other obstacles

Distributed digital “Obstacle Beacons” across mesh to attach to obstacles and make noise

Controlled firing of beacons based on user gaze and movement

Text Recognition

Accessed camera, manually taking pictures on command

Sent photos to Google Vision API to extract text

Placed “Text Beacons” in environment based on camera field of view when picture was taken
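Placing a Text Beacon from a single photo comes down to turning the text's position in the image into a direction in space. The Python sketch below (the project itself was built in C# in Unity) is an illustration under assumptions, not our code: it assumes an ideal pinhole camera with known horizontal and vertical field of view, text located by the center of its bounding box in normalized image coordinates, and a camera facing straight ahead with no rotation. The function names, field-of-view values, and fixed placement distance are hypothetical.

```python
import math

def text_beacon_direction(u, v, h_fov_deg, v_fov_deg):
    """Unit direction (x, y, z) in camera space toward image point (u, v).

    Hypothetical helper. Assumes a pinhole camera; (u, v) are normalized
    image coordinates with (0, 0) at top-left and (1, 1) at bottom-right.
    Camera space: x right, y up, z straight out of the lens.
    """
    # Half-width and half-height of the image plane one meter in front of the camera.
    half_w = math.tan(math.radians(h_fov_deg) / 2)
    half_h = math.tan(math.radians(v_fov_deg) / 2)
    # Map u from [0, 1] to [-half_w, half_w]; flip v so "up" is positive.
    x = (2 * u - 1) * half_w
    y = (1 - 2 * v) * half_h
    norm = math.sqrt(x * x + y * y + 1)
    return (x / norm, y / norm, 1 / norm)


def place_text_beacon(camera_pos, u, v, h_fov_deg, v_fov_deg, distance):
    """Hypothetical placement `distance` meters along the ray to the text.

    Assumes the camera faces +z with no rotation; a real app would rotate the
    direction by the camera pose and raycast it against the spatial mesh.
    """
    d = text_beacon_direction(u, v, h_fov_deg, v_fov_deg)
    return tuple(c + distance * di for c, di in zip(camera_pos, d))


# Text centered in the photo lands straight ahead of the camera.
print(place_text_beacon((0.0, 1.6, 0.0), 0.5, 0.5, 60, 40, 3.0))  # (0.0, 1.6, 3.0)
```

The direction is the reliable part of this estimate; intersecting it with the spatial mesh would give a better beacon position than a fixed distance.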

Sound Design

Determined sound profile of obstacle and text beacons to maximize utility while minimizing discomfort or distraction

Experimented with embedding information in sound, such as indicating obstacle height via pitch
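One simple way to embed height in pitch is to map height onto a musical interval. The Python sketch below (the project itself was built in C# in Unity) assumes heights clamped to a hypothetical 0 to 2 m range, spread linearly over two octaves and returned as a pitch multiplier where 1.0 means unchanged, the kind of value Unity's AudioSource.pitch takes. The range and span are illustrative, not the values our sound design team chose.

```python
def height_to_pitch(height_m, min_h=0.0, max_h=2.0, span_semitones=24):
    """Map obstacle height (meters) to a pitch multiplier, 1.0 = unchanged.

    Hypothetical mapping: heights are clamped to [min_h, max_h]; the lowest
    height plays at the base pitch and the highest plays `span_semitones`
    higher. Working in semitones makes equal height steps sound like equal
    musical steps.
    """
    clamped = max(min_h, min(max_h, height_m))
    fraction = (clamped - min_h) / (max_h - min_h)
    return 2 ** (fraction * span_semitones / 12)


for h in (0.0, 1.0, 2.0):
    print(h, height_to_pitch(h))  # prints 0.0 1.0, then 1.0 2.0, then 2.0 4.0
```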

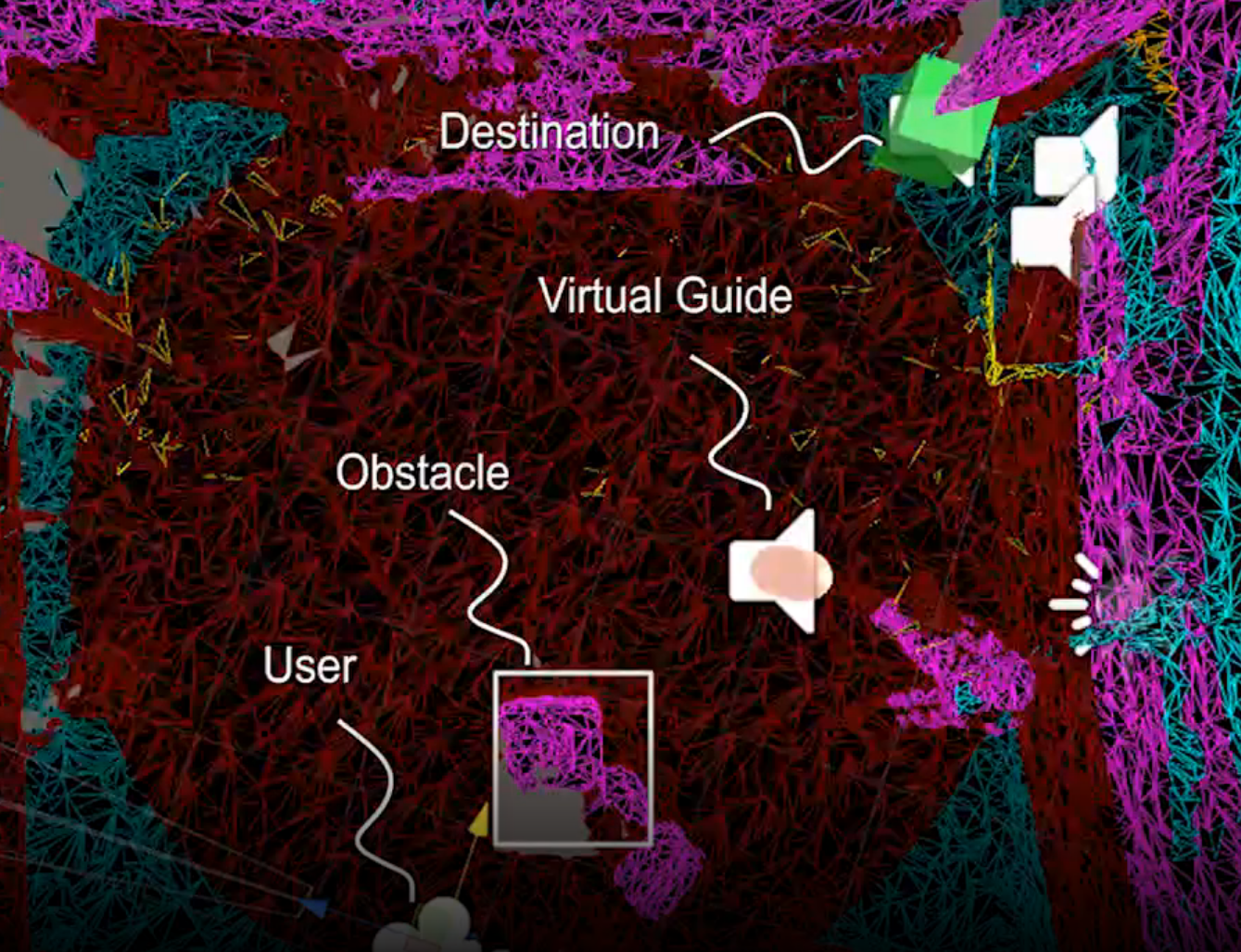

In this image of a sonified room, red beacons make one sound representing obstacles, while yellow beacons make a more subtle sound representing walls. Grey beacons are outside the user’s gaze and are silent.

Within obstacle recognition, our goal was to sonify each obstacle without having to identify it. We knew that identifying individual objects as chairs, laptops, etc. would take a lot of computation; instead, if the HoloLens knew there was some meshed obstacle the user would have to navigate around, we wanted the user to know that too. Since we couldn’t just sonify the whole mesh directly, we instead chose to spread audio beacons across it, each of which would make a noise; the HoloLens’ spatial sound capability would then help users understand where objects were. My teammate Rajandeep handled most of the mesh processing, while I focused on instantiating and raycasting obstacle beacons to achieve a uniform distribution over the mesh.
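The beacon placement described above can be sketched without a game engine as a fan of rays cast from the user's head, with a beacon dropped at the nearest mesh hit along each ray. This Python sketch is an illustration under assumptions, not our Unity C# code: the mesh is a plain list of triangles, intersections use the standard Möller-Trumbore ray/triangle test (in Unity, Physics.Raycast against the spatial mapping collider would play this role), and the fan angles, step, range, and function names are hypothetical.

```python
import math

def _sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def _dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def ray_triangle(origin, direction, tri, eps=1e-9):
    """Möller-Trumbore ray/triangle test: hit distance t, or None on a miss."""
    a, b, c = tri
    e1, e2 = _sub(b, a), _sub(c, a)
    p = _cross(direction, e2)
    det = _dot(e1, p)
    if abs(det) < eps:  # ray is parallel to the triangle's plane
        return None
    inv = 1.0 / det
    s = _sub(origin, a)
    u = _dot(s, p) * inv
    if u < 0.0 or u > 1.0:
        return None
    q = _cross(s, e1)
    v = _dot(direction, q) * inv
    if v < 0.0 or u + v > 1.0:
        return None
    t = _dot(e2, q) * inv
    return t if t > eps else None  # ignore hits behind the origin

def fan_directions(yaw_range_deg, pitch_range_deg, step_deg):
    """Unit directions on an even yaw/pitch grid centered on +z (y is up)."""
    n_yaw = int(round(yaw_range_deg / step_deg)) + 1
    n_pitch = int(round(pitch_range_deg / step_deg)) + 1
    dirs = []
    for i in range(n_yaw):
        yaw = math.radians(-yaw_range_deg / 2 + i * step_deg)
        for j in range(n_pitch):
            pitch = math.radians(-pitch_range_deg / 2 + j * step_deg)
            dirs.append((math.cos(pitch) * math.sin(yaw),
                         math.sin(pitch),
                         math.cos(pitch) * math.cos(yaw)))
    return dirs

def place_obstacle_beacons(origin, triangles, directions, max_range=5.0):
    """Hypothetical: one beacon at the nearest mesh hit along each ray."""
    beacons = []
    for d in directions:
        hits = [t for t in (ray_triangle(origin, d, tri) for tri in triangles)
                if t is not None]
        if hits and min(hits) <= max_range:
            t = min(hits)
            beacons.append(tuple(o + t * c for o, c in zip(origin, d)))
    return beacons


# A flat wall 2 m in front of the user, built from two triangles.
wall = [((-5, -5, 2), (5, -5, 2), (5, 5, 2)),
        ((-5, -5, 2), (5, 5, 2), (-5, 5, 2))]
beacons = place_obstacle_beacons((0, 0, 0), wall, fan_directions(60, 40, 10))
print(len(beacons))  # 35
```

An even angular grid spaces beacons evenly on nearby surfaces but spreads them farther apart on distant ones, since the gap between neighboring rays grows with distance.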

This involved multiple iterations of code; for example, when our raycasts kept missing narrow objects like poles, we switched to spherecasts that could catch them. When that resulted in objects next to the user accidentally triggering beacon placement, we found and switched to Walter Ellis’ conecast script.
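The difference between the three casts is geometric. A spherecast has a fixed radius, so it is just as wide beside the user as it is several meters away, while a cone is narrow near the user and widens with distance. The hypothetical Python point tests below illustrate only that difference; they are not Walter Ellis' script or our code, and the radius and angle values are made up for the example.

```python
import math

def in_sphere_cast(point, origin, direction, radius, max_dist):
    """True if `point` is within `radius` of the cast segment (a capsule).

    `direction` must be a unit vector. A radius of 0 behaves like a thin ray.
    """
    rel = [p - o for p, o in zip(point, origin)]
    # Closest point on the segment [origin, origin + max_dist * direction].
    t = max(0.0, min(max_dist, sum(r * d for r, d in zip(rel, direction))))
    closest = [o + t * d for o, d in zip(origin, direction)]
    gap = math.sqrt(sum((p - c) ** 2 for p, c in zip(point, closest)))
    return gap <= radius

def in_cone_cast(point, origin, direction, half_angle_deg, max_dist):
    """True if `point` lies inside a cone of `half_angle_deg` around the ray.

    `direction` must be a unit vector.
    """
    rel = [p - o for p, o in zip(point, origin)]
    along = sum(r * d for r, d in zip(rel, direction))  # distance along the ray
    if along <= 0.0 or along > max_dist:
        return False
    dist = math.sqrt(sum(r * r for r in rel))
    return along / dist >= math.cos(math.radians(half_angle_deg))


ray = ((0.0, 0.0, 0.0), (0.0, 0.0, 1.0))
pole_edge = (0.1, 0.0, 4.0)     # edge of a narrow pole, slightly off the ray, 4 m away
beside_user = (0.12, 0.0, 0.2)  # something right next to the user's head
print(in_sphere_cast(pole_edge, *ray, radius=0.15, max_dist=5.0),
      in_cone_cast(pole_edge, *ray, half_angle_deg=5.0, max_dist=5.0))    # True True
print(in_sphere_cast(beside_user, *ray, radius=0.15, max_dist=5.0),
      in_cone_cast(beside_user, *ray, half_angle_deg=5.0, max_dist=5.0))  # True False
```

A thin ray (radius 0) misses the pole edge entirely; the sphere catches it but also fires on the nearby object, while the cone catches the pole and ignores the nearby object.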

Similarly, when we found it was hard to distinguish between obstacles and simply the contours of the room, we identified the walls in the mesh and made sure that beacons placed on them used a different, more subtle sound, so users could differentiate more interesting features of a room. (The sound design team chose the actual sounds used; I made sure that the correct beacons would be placed.)
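Telling walls apart from other obstacles can be approximated from plane orientation and size. A minimal Python sketch (the project itself was built in C# in Unity), assuming the mesh has already been split into planes, each with a normal and an area, that the floor has been filtered out, and that y points up; the tilt and area thresholds and the sound names are hypothetical.

```python
import math

# Hypothetical sound names; the real sounds were chosen by our sound design team.
WALL_SOUND = "wall_subtle"
OBSTACLE_SOUND = "obstacle"

def is_wall(normal, area_m2, max_tilt_deg=10.0, min_wall_area_m2=1.0):
    """Treat a large plane whose normal is nearly horizontal as a wall (y is up)."""
    nx, ny, nz = normal
    length = math.sqrt(nx * nx + ny * ny + nz * nz)
    # Angle between the normal and the horizontal plane: 0 for a vertical surface.
    tilt_deg = math.degrees(math.asin(min(1.0, abs(ny) / length)))
    return tilt_deg <= max_tilt_deg and area_m2 >= min_wall_area_m2

def beacon_sound(normal, area_m2):
    """Pick the subtler wall sound for walls, the obstacle sound otherwise."""
    return WALL_SOUND if is_wall(normal, area_m2) else OBSTACLE_SOUND


print(beacon_sound((1.0, 0.0, 0.0), 6.0))  # wall_subtle (large upright plane)
print(beacon_sound((0.0, 1.0, 0.0), 1.5))  # obstacle (tabletop faces up)
print(beacon_sound((0.0, 0.0, 1.0), 0.2))  # obstacle (small upright surface, e.g. a sign)
```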

Integration and Interface

In addition to my role in obstacle detection, I integrated the work of the rest of the team and developed the user interface to tie everything together. This included:

Ideating ideal interface elements based on user research

Creating a code structure that my teammates could plug their scripts into

Experimenting with voice and gesture input

Understanding the text recognition coroutines and API calls and how to integrate them

Enabling the sound design team to apply and test various sound profiles

From our research, users wanted different kinds of input modalities: voice could be used hands free, but gestures or remote input could draw less attention and work in loud areas. However, the HoloLens 1 had limited gesture interpretation and remote control inputs, so for the MVP I relied solely on voice input.
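Routing every voice command through one place also gives teammates one place to plug their features in. The Python sketch below is hypothetical (the project used C# in Unity, where a speech recognizer such as Unity's KeywordRecognizer would report each recognized phrase): each feature registers its own phrases, and unrecognized phrases produce no speech, in keeping with treating sound as a scarce resource. The class name and command phrases are illustrative assumptions.

```python
from typing import Callable, Dict

class VoiceCommandRouter:
    """Hypothetical registry that maps spoken phrases to feature callbacks.

    Each feature (text recognition, proximity mode, ...) registers its own
    phrases, so the speech recognizer only needs to talk to the router.
    """

    def __init__(self) -> None:
        self._handlers: Dict[str, Callable[[], str]] = {}

    @staticmethod
    def _key(phrase: str) -> str:
        # Case- and whitespace-insensitive matching.
        return " ".join(phrase.lower().split())

    def register(self, phrase: str, handler: Callable[[], str]) -> None:
        key = self._key(phrase)
        if key in self._handlers:
            raise ValueError(f"phrase already registered: {phrase!r}")
        self._handlers[key] = handler

    def on_phrase_recognized(self, phrase: str) -> str:
        """Run the matching handler and return what it wants spoken ("" if none)."""
        handler = self._handlers.get(self._key(phrase))
        # Unknown phrases stay silent rather than producing an error sound.
        return handler() if handler else ""


router = VoiceCommandRouter()
router.register("read text", lambda: "Scanning for text")       # hypothetical phrase
router.register("proximity mode", lambda: "Proximity mode on")  # hypothetical phrase
print(router.on_phrase_recognized("Read  Text"))  # Scanning for text
```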

Lessons Learned during Development

Designers often talk about the need to prototype early and often, and this project is a perfect example. We had been sure the singing mesh idea had promise, until we tried it out and realized that when EVERYTHING makes a sound, you can’t tell where ANYTHING is! We realized pretty quickly that we had to be more selective with what we were sonifying, and introduced a filter based on where the user was looking.
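The gaze filter described above can be sketched as a cone test around the head's forward direction: only beacons inside the cone and within range stay audible. This is a Python illustration under stated assumptions (the project itself used C# in Unity); the 30 degree half-angle, 5 m range, and function name are hypothetical, not the values we shipped.

```python
import math

def audible_beacons(head_pos, gaze_dir, beacons, half_angle_deg=30.0, max_range=5.0):
    """Keep only beacons inside a cone around the gaze direction and within range.

    Hypothetical defaults: a 30 degree half-angle and a 5 m range.
    """
    cos_limit = math.cos(math.radians(half_angle_deg))
    g_len = math.sqrt(sum(c * c for c in gaze_dir))
    gaze = [c / g_len for c in gaze_dir]
    audible = []
    for b in beacons:
        rel = [bc - hc for bc, hc in zip(b, head_pos)]
        dist = math.sqrt(sum(c * c for c in rel))
        if dist == 0.0 or dist > max_range:
            continue  # at the head itself, or too far away to matter
        cos_angle = sum(r * g for r, g in zip(rel, gaze)) / dist
        if cos_angle >= cos_limit:
            audible.append(b)
    return audible


beacons = [(0, 0, 2), (2, 0, 0), (0, 0, -2), (0.5, 0, 3), (0, 0, 8)]
print(audible_beacons((0, 0, 0), (0, 0, 1), beacons))  # [(0, 0, 2), (0.5, 0, 3)]
```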

“Building a prototype helped us realize that our original idea would NOT work as initially designed. Thanks to that, we could make adjustments sooner rather than later.”

Prototyping also helped us become aware of certain design tradeoffs. For example, it turns out the farther away you can hear obstacles, the harder it is to tell when you’re getting close to them. We could adjust the volume curve so that users could either hear obstacles far away, OR hear an audible difference in volume as they got closer. Ultimately we created a “proximity mode” toggle that could switch between the two curves; in the future, we’d like to make it more dynamic and responsive to the user’s movement.
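The trade-off between hearing far and hearing change can be seen by comparing two volume curves. A minimal Python sketch, assuming simple linear fades with made-up ranges (8 m for the default curve, 2 m for proximity mode); in Unity, curves like these would more likely be set as a custom rolloff on an AudioSource, and none of these numbers come from our app.

```python
def beacon_volume(distance_m, proximity_mode):
    """Volume in [0, 1] for a beacon `distance_m` meters from the user.

    Hypothetical curves: the default mode is audible out to 8 m but changes
    slowly; proximity mode is silent beyond 2 m but changes steeply.
    """
    if proximity_mode:
        max_d = 2.0
        return max(0.0, 1.0 - distance_m / max_d)
    max_d, far_volume = 8.0, 0.4
    if distance_m > max_d:
        return 0.0
    # Linear fade from 1.0 at the user down to far_volume at max_d.
    return 1.0 - (1.0 - far_volume) * distance_m / max_d


for mode in (False, True):
    step = beacon_volume(1.0, mode) - beacon_volume(2.0, mode)
    print(mode, round(step, 3))  # False 0.075, then True 0.5
```

Stepping from 2 m to 1 m raises the volume by only 0.075 on the long-range curve but by 0.5 in proximity mode, which is the audible difference the toggle trades range for.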

User Testing: Putting the Prototype through its Paces

Testing Goals

Our goal with the user testing was to test the core value proposition of the application in a controlled environment. That is, if we imagined users had an ideal version of the application on advanced hardware, would it be useful? As mentioned above, we were more concerned with building a proof of concept for future AR accessibility applications than testing the viability of the HoloLens 1 in the present.

Specifically, we wanted to understand:

Would users be able to identify and navigate to and around obstacles?

Would users be able to identify and read text at a distance?

Would these features enhance each other’s usefulness?

What did our users consider high priority in these and potential new features?

I’ll note that my role in the user testing was limited to note taking and technical support; our user researcher Anu Pandey handled most other elements.

Testing Process

Our testing setup, designed to recreate the challenge blind people face identifying the correct bus stop. Here we see visually impaired web access evangelist Lucy Greco testing our interface.

As we had chosen to simulate the Perkins School for the Blind Bus Stop Challenge, our testing environment was a relatively open area with several simulated bus stops - poles with signs like “Bus Stop 52,” “Bus Stop 80,” or “Watch for Pedestrians.” We couldn’t use real bus stops because of the sun’s interference with HoloLens scanning, as well as safety and background noise concerns.

We had users attempt four tasks:

Object Localization - Identify the locations of the three sign poles using audio cues.

Text Recognition - Identify bus stop 52.

Direct Navigation - Walk to bus stop 52.

Text Recognition and Direct Navigation in Proximity Mode - Switch to “proximity mode,” which would limit detection range but give the user a better indication of changing distance, then identify and walk to bus stop 80.

After that, we asked them some qualitative questions around their experience, useful potential features, and scenarios in their life they could imagine this kind of application being useful.

Testing Results

Ultimately, we tested the application with 7 blind participants. Overall, we got a lot of positive feedback that made us feel like our core concept had strong potential. Users were able to identify the direction of the bus stops and read the text on them moderately reliably; they found the sound design useful rather than annoying and the commands easy to learn and self-explanatory; and they were able to imagine multiple scenarios in their life where the device would be useful.

However, we also learned of some critical roadblocks that would have to be solved if this were to ever be useful in day to day life.

Poor ergonomics - Every single user felt that the HoloLens 1 headset was heavy and uncomfortable, and that it dulled their sense of the air currents and echoes that normally let them perceive their surroundings. Several asked for a headband-style device that would not cover their face.

Unintuitive gaze control - Several of our blind users simply were not used to pointing their head towards areas they wanted more information about, and had to be reminded to do so. This also became a problem for pointing the device towards text to be identified, as combined with the weight of the headset, users often let their head droop towards the ground.

Low speed of mapping and text recognition - The several second delays in mapping and text processing required a controlled, pre-scanned environment and repeatedly frustrated our users, especially when they failed to identify text on the first scan.

Future Directions

Future Features

We had come up with many features during ideation (see "other considered features," above), but feedback from user testing brought a few to the forefront. Improved text recognition was first and foremost among them: users wanted to be able to set the app to look for certain text, then stay silent until it was found. This would avoid the pain point of having to repeatedly scan for text and wait for analysis.

We also received requests for more unusual features, such as queue following. Our testers told us it can be very difficult as a blind person to stay in a queue at a grocery store or ticket window without treading on the heels of the person in front of you; they suggested the HoloLens might be capable of assisting.

Between these features and improvements to our core obstacle sonification feature, so that users can home in on obstacles faster and track their distance to them, our team continuing app development at Virtual Reality at Berkeley will have a lot to work on!

Economic Opportunities for Implementation

In addition to the interface itself, we considered some realistic contexts for deploying the app, based on an economic analysis of the competitive landscape for visual assistance. I conducted this analysis along with a classmate, Jay Amado.

We sorted the players in this landscape into four categories:

Customers - Organizations that would purchase the app or AR headsets.

Competitors - Other assistive technologies that might compete for sales of our application.

Complementors - Companies and tools that could synergize with our application.

Dependencies - Resources necessary for the development and functioning of our app.

Though this analysis didn't directly affect our MVP development, we felt it was an important step in moving the project from a simple proof of concept towards a real possibility for improving the day-to-day lives of blind and visually impaired people.

On a similar note, I also wrote a separate paper examining the economic and legal implications of crowdsourced spatial mapping, looking at privacy concerns, economic incentives for data sharing, and other issues that would inevitably crop up were this project to be realized.

Retrospective

Accessibility Awakening

If there’s one thing I take away from this project, it’s an appreciation of the huge challenges people with disabilities face in getting technology to work for them, and of the mirror-image, far too often neglected challenge to creators to design and develop technology in accessible ways. Even after months of studying accessibility for blind and visually impaired people, I’m well aware that I’m still a novice when it comes to designing for them, let alone for people with intersectional disabilities such as blindness combined with deafness or motion impairment.

It's amazing to me now how many common apps take the user's abilities for granted and simply fail to function for the millions of people who don't have those abilities. The fight for better design for all has really resonated with me, and I intend to keep learning how to design accessibly and to fight for the implementation of accessible design everywhere.

Project Management Powerup

Most of the projects I've worked on in my professional and academic career have been assigned to me. This one I chose for myself, recruited others to my cause, and we decided collectively what we wanted to accomplish and could accomplish. This demanded more of me as a leader and PM than any previous project had, and I feel that I grew into the role. I learned how to convince people of the importance of my vision, and how to lead and manage my teammates to accomplish it.

Final Feelings

I'm proud to have flipped the script on the typical use case for augmented reality to find a new way to help people. Though I know our work isn't as refined as some of the official products and longstanding research projects out there, and that AR won't be affordable or usable for blind people for some time yet, I remain hopeful that this project will become a valuable contribution to the accessibility landscape and serve as an inspiration for others. I know it has been one for me.

Icons via the Noun Project.

AR for VIPs logo - Lloyd Humphreys

Blind man silhouette - Scott de Jonge

AR icon - Meikamoo

Stopwatch - Tezar Tantular

Bankrupt - Phạm Thanh Lộc

Blind user access - Corpus delicti

Obstacle Recognition - Adrien Coquet

Text Recognition - Thomas Helbig

Sound Design - Yana Sapegina

Queue - Adrien Coquet